Past programming languages and their influences on today's languages and programming paradigms

Blog

I would like to thank Dr. Ronald Mak for giving us a chance to post a report on the ETHW website.

I was able to understand more about the history of computing technologies we use today and appreciate it.

2011/10/05

- Created topic.

2011/10/17

- Read about and researched several programming paradigms, such as functional, object-oriented, and logic programming.

2011/11/13

- Read about and researched programming paradigms and the history of programming languages.

- Wrote the first draft.

- Need to research more on exception and error handling techniques in old programming languages and modern programming languages.

2011/11/25

- Read about and researched error handling techniques in old and modern programming languages.

2011/12/11

- Wrote the final draft.

Introduction

There are many different programming languages, but most are limited to a few paradigms. This paper first tries to define the concepts of paradigm and language, and then briefly surveys the history of programming languages to better understand how programming paradigms work. The most influential paradigms are then examined in chronological order, as well as multi-paradigms and a few others. In addition, the paper discusses what causes paradigm shift and what attempts have been made through history to make software more reliable, especially in terms of error and exception handling. Lastly, some conclusions are presented.

The History of Programming Languages

A programming language is a notational system for describing computing tasks in both a machine- and human-readable form. It is based on rules of syntax and semantics. Each programming language has a particular style of notation.

The word “paradigm” comes from the Greek word “paradeigma,” and generally refers to a category of entities sharing a common characteristic. By implication, a programming paradigm is a fundamentally common style of computing.

Initially, all programming languages began as attempts to find an efficient way to convert human language into machine code. They then evolved into languages that had a high level of abstraction, hid the hardware details, and used representations that were easier for human programmers to understand. Later, as problems became more complex and programs became larger and more sophisticated, object-oriented and event-driven programming languages were introduced to suggest new ways of solving such problems and to meet new demands.

It may be helpful to understand the history of programming languages before discussing current programming paradigms. In the 1940s, programs, including all instructions and memory locations, were written in machine-level programming languages in binary form (0s and 1s), and data had to be moved around in memory manually. Programming in binary code was tedious and error prone, and the programs were not easy for humans to read, write, or debug. In addition, even a simple algorithm resulted in lengthy code, demonstrating the need for mnemonic codes to represent operations.

To mitigate the difficulty of writing in binary, symbolic programming languages such as assembly language, which used symbolic notation to represent machine language commands, were introduced in the 1950s. As a result, programmers could assign meaningful names to computer instructions. A program using such names was converted into machine code via an assembler, a program that translated the names into the binary instructions understood by the computer. However, although assembly language was an improvement over machine language, it still required the programmer to think on the machine's level. Because extensive knowledge of the CPU and the machine’s instruction set was required, it was easy to make mistakes. In addition, assembly or machine code lacked portability and had a high dependence on hardware, preventing it from running on different computers. Thus, there was a need for machine independence and greater ease of use.

Programming languages quickly evolved to make programming easier and less error prone. The forms that arose were considered high-level languages because the program statements were not closely related to the internal characteristics of the computer, making it possible for people to write programs using familiar terms instead of difficult machine instructions. These high-level programming languages included COBOL, FORTRAN, BASIC, and C. Most of these languages had compilers, which improved speed, and introduced abstract ideas so that programmers could concentrate on finding the solution to a problem rather than on the low-level details of data representation. This marked the shift to the “procedural” paradigm from the “low-level” programming paradigm. These procedural languages used a step-by-step approach. In addition, they enabled portability and could run on different machines.

Next, programmers sought to find an even more logical approach to creating programs, prompting the birth of the "object-oriented" programming paradigm. This paradigm attempts to abstract modules of a program into reusable objects and includes programming languages like Java and Smalltalk.

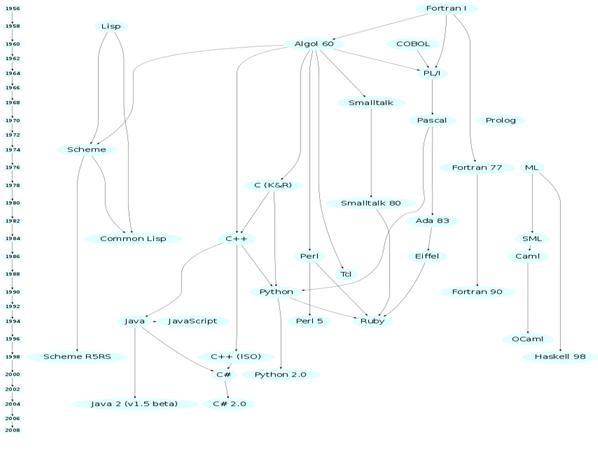

The diagram above shows the main programming languages in chronological order.

Programming Paradigms

The most influential programming paradigms include the procedural (also known as the imperative), structured, functional, logic, and object-oriented paradigms.

The Procedural (Imperative) Programming Paradigm

The traditional model of computation, the procedural programming paradigm specifies a list of operations that the program must complete to reach its final goal. It describes the steps that change the computer’s state of memory by providing statements such as assignment statements. This paradigm creates procedures, functions, subroutines, or methods by splitting tasks into small pieces, thus allowing a section of code to be reused in the program to some extent and making it easier for programmers to understand and maintain the program structure. However, it is still difficult to solve problems, especially when they are large and complicated, since procedural programming languages are not particularly close to the way humans think or reason. Procedural programs are difficult to maintain and it is not easy to reuse the code when the program is large and has many procedures or functions. Moreover, if a modification must be made in one of its states or conditions, it is difficult and time consuming to do so. These drawbacks make using this paradigm very expensive. Procedural programming languages include Algol, FORTRAN, COBOL, and BASIC.
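As a minimal sketch of this style (written in Python purely for illustration; the function name `average` and its handling of an empty list are assumptions, not part of the original report), the procedure below reaches its result through a sequence of assignment statements that change the program's state step by step:

```python
# Illustrative sketch only; the name `average` is hypothetical.
def average(values):
    """Compute the mean by explicitly updating state, one step at a time."""
    total = 0                  # state: running sum
    count = 0                  # state: number of items seen so far
    for v in values:
        total = total + v      # each assignment changes the program state
        count = count + 1
    if count == 0:
        return 0.0             # assumption: an empty list averages to 0.0
    return total / count
```

The procedure can be reused wherever an average is needed, but the caller still has to think in terms of the steps the machine performs.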

The Structured Programming Paradigm

The structured programming paradigm can be seen as a subset of the procedural programming paradigm. Its characteristics include removing or reducing reliance on global variables and the GOTO statement, and introducing variables local to blocks such as procedures, functions, subroutines, or methods, so that variables declared inside a block are invisible outside it. The structured programming paradigm is often associated with the top-down approach. This approach first decomposes the problem into smaller pieces. These pieces are further decomposed, finally creating a collection of individual problems. Each problem is then solved one at a time. Though this approach is successful in general, it causes problems later when revisions must be made. Because each change requires modifying the program, this approach limits the reuse of code or modules. The structured programming paradigm includes languages such as Pascal and C.

The Functional Programming Paradigm

The functional programming paradigm was created to model the problem rather than the solution, thus allowing the programmer to take a high-level view of what is to be computed rather than how. In this paradigm, the program is actually an expression that corresponds to a mathematical function f. Thus, it emphasizes the definition of functions instead of the execution of a sequential list of instructions.

The following is the factorial function written in a language called Lisp:

(defun factorial (n) (if (<= n 1) 1 (* n (factorial (- n 1)))))

In the above example, the factorial of n is defined as 1 if n <= 1; otherwise it is n * factorial(n - 1). The definition does not list the steps needed to calculate the factorial. In its pure form, the functional programming paradigm has no notion of state and no sequencing of statements: a program consists of functions that only take inputs and produce outputs, with no internal state that affects the output produced for a given input. It also permits functional solutions to problems by allowing a programmer to treat functions as first-class objects.

Because each function is designed to accomplish a specific task given its arguments while not relying on an external state, the functional programming paradigm increases readability and maintainability. However, since this paradigm corresponds less closely to current hardware such as the Von Neumann Architecture, it can be less efficient, and its time and space usage can be hard to justify. Also, some things are harder to fit into a model in which functions only take inputs and produce outputs. The functional programming paradigm includes languages such as Lisp and Scheme.
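The ideas of stateless functions and first-class functions can be sketched in Python as follows. This is only an illustration: `factorial` mirrors the Lisp definition above, while `compose`, `product`, and `double_then_factorial` are hypothetical helper names introduced here.

```python
# Illustrative sketch only; all helper names are hypothetical.
from functools import reduce


def factorial(n):
    """Pure recursive definition mirroring the Lisp version."""
    return 1 if n <= 1 else n * factorial(n - 1)


def compose(f, g):
    """Return a new function computing f(g(x)); functions are ordinary values."""
    return lambda x: f(g(x))


def product(numbers):
    """Fold a sequence with multiplication instead of updating a loop variable."""
    return reduce(lambda acc, x: acc * x, numbers, 1)


# A function built from other functions, without any assignment to shared state.
double_then_factorial = compose(factorial, lambda x: 2 * x)
```

Because none of these functions reads or writes external state, each call with the same argument always yields the same result.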

The Logic Programming Paradigm

Instead of specifying instructions for a computer, the logic programming paradigm enables the expression of logic. It is therefore useful for dealing with problems where it is not obvious what the functions should be. In this paradigm, programmers specify a set of facts, such as statements or relationships that are held to be true, and a set of axioms or rules (i.e., if A is true, then B is true), and use queries to the execution environment to see whether certain relationships hold and to determine the answer by logical inference. This paradigm is popular for database interfaces, expert systems, and mathematical theorem provers.

In the following example, we declare the facts about some domain. We can then make queries about these facts—for example, are ryan and brian siblings?

sibling(X,Y) :- parent(Z,X), parent(Z,Y).
parent(X,Y) :- father(X,Y).
parent(X,Y) :- mother(X,Y).
mother(megan, brian).
father(ryan, brian).
father(ryan, molly).
father(mike, ryan).

Under the logic programming paradigm fall languages such as Prolog.
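To make the query mechanism concrete, the facts and rules from the Prolog example can be imitated in Python. This is a rough sketch under simplifying assumptions: it only answers yes/no queries about named people and does not implement Prolog's general unification or backtracking. The names `FATHER`, `MOTHER`, `parent`, and `sibling` are chosen here to match the example.

```python
# Illustrative sketch only; not a general logic-programming engine.
# Facts copied from the Prolog example, stored as (parent, child) pairs.
FATHER = {("ryan", "brian"), ("ryan", "molly"), ("mike", "ryan")}
MOTHER = {("megan", "brian")}


def parent(x, y):
    """parent(X,Y) holds if father(X,Y) or mother(X,Y) is a known fact."""
    return (x, y) in FATHER or (x, y) in MOTHER


def sibling(x, y):
    """sibling(X,Y) holds if some Z is a parent of both X and Y."""
    people = {person for pair in FATHER | MOTHER for person in pair}
    return any(parent(z, x) and parent(z, y) for z in people)
```

With these facts, `sibling("ryan", "brian")` is false because ryan is brian's father, while `sibling("brian", "molly")` is true because both have ryan as a parent. Like the Prolog rule as written, the sketch does not exclude the case where X and Y are the same person.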

The Object-Oriented Programming Paradigm

Object-oriented programming is the newest and most prevalent paradigm. It suggests new ways of thinking about problem solving, since its techniques more closely model the way humans solve problems. Traditionally, a program has been viewed as a logical procedure that takes input, processes it, and generates output. By contrast, the object-oriented programming paradigm focuses on modeling problems in terms of entities called objects that have attributes and behaviors and that interact with other entities using message passing.

The key characteristics of object-oriented programming include class, abstraction, encapsulation, inheritance, and polymorphism. A class is a template or prototype from which objects are created; it contains variables and methods and specifies a user-defined data type. Abstraction separates the interface from the implementation. Encapsulation insulates data by wrapping them together with the methods that operate on them, and specifies what information in an object can be exchanged with others, so that the internal implementation of a class stays hidden. Inheritance enables hierarchical relationships to be represented and refined. Polymorphism allows objects of different types to receive the same message and respond in different ways.

The object-oriented programming paradigm has many benefits over traditional ones. Since it emphasizes modular code through the abstraction and encapsulation concepts, facilitates a disciplined software development process, enables building secure programs through the data-hiding concept, and eliminates redundant code and defines new classes from existing ones with little effort through inheritance, it creates enhanced reusability, extensibility, reliability and maintainability. The object-oriented programming paradigm includes languages like Smalltalk and Eiffel.
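The characteristics listed above can be seen together in a small Python sketch. The classes `Shape`, `Rectangle`, and `Circle` are hypothetical examples, not taken from the original report, and Python enforces data hiding only by convention (the leading underscore).

```python
# Illustrative sketch only; class names are hypothetical.
import math


class Shape:
    """Base class: defines the interface that all shapes share (abstraction)."""

    def __init__(self, name):
        self._name = name  # leading underscore: internal by convention (encapsulation)

    def area(self):
        raise NotImplementedError("subclasses must implement area()")

    def describe(self):
        return f"{self._name} with area {self.area():.2f}"


class Rectangle(Shape):
    """Inheritance: Rectangle reuses describe() from Shape."""

    def __init__(self, width, height):
        super().__init__("rectangle")
        self._width = width
        self._height = height

    def area(self):
        return self._width * self._height


class Circle(Shape):
    def __init__(self, radius):
        super().__init__("circle")
        self._radius = radius

    def area(self):
        return math.pi * self._radius ** 2


# Polymorphism: the same message, describe(), produces type-specific behavior.
descriptions = [shape.describe() for shape in (Rectangle(2, 3), Circle(1))]
```

A new shape can be added by defining one more subclass, without modifying the code that calls `describe()`.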

Other Paradigms

The Concurrent Programming Paradigm

On single-core architectures, this paradigm is traditionally implemented by means of processes or threads spawned by a single program. However, due to the rapid rise of multi-cores in recent hardware architectures, a new way of implementing this paradigm was introduced to exploit the resulting parallelism.
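As a small illustration of a single program spawning threads, the Python sketch below splits work into chunks and hands them to a thread pool from the standard library. The function names are hypothetical. Note also that in standard CPython builds, threads interleave rather than run CPU-bound code truly in parallel, so this shows the programming model rather than a speed-up.

```python
# Illustrative sketch only; function names are hypothetical.
from concurrent.futures import ThreadPoolExecutor


def count_upper(text):
    """Count the upper-case letters in one chunk of text."""
    return sum(1 for ch in text if ch.isupper())


def count_upper_concurrently(chunks, workers=4):
    """Process the chunks on a pool of threads and combine the partial counts."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_upper, chunks))
```

Each chunk is processed independently, so no shared variable needs to be protected by a lock; the partial results are combined only after the workers finish.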

The Event-Based Programming Paradigm

The event-based programming paradigm focuses on the flow of a program whose execution is controlled by events such as a mouse click or a keystroke from users, or a message from the operating system. Events cause the execution of trigger functions, which process them. This idea already existed in early command-line environments such as DOS and in embedded systems.
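The relationship between events and the functions they trigger can be sketched as a tiny dispatcher in Python. The class `EventDispatcher` and the event names `"click"` and `"key"` are hypothetical; real GUI toolkits and operating systems provide their own, richer mechanisms.

```python
# Illustrative sketch only; the dispatcher and event names are hypothetical.
class EventDispatcher:
    """Maps event names to handler functions and invokes them when events fire."""

    def __init__(self):
        self._handlers = {}

    def on(self, event_name, handler):
        """Register a handler (a trigger function) for an event."""
        self._handlers.setdefault(event_name, []).append(handler)

    def fire(self, event_name, payload=None):
        """Deliver an event to every registered handler and collect the results."""
        return [handler(payload) for handler in self._handlers.get(event_name, [])]


dispatcher = EventDispatcher()
dispatcher.on("click", lambda pos: f"clicked at {pos}")
dispatcher.on("key", lambda key: f"pressed {key}")
```

The program's control flow is decided by which events arrive, not by the order in which the handlers were written; an event with no registered handler is simply ignored.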

Multi-Paradigms

It is possible to combine paradigms to obtain the advantages of each. However, this also increases the complexity of the program. It is up to the programmer to weigh the advantages and disadvantages to determine if combining paradigms is beneficial.

The goal of a multi-paradigm language is to leverage constructs from different paradigms to make a program that suits the nature of a specific problem. C++, Leda, Common Lisp, and Scala are examples of languages that combine paradigms. C++ started as an extension of the C language. Its original goal was to add object orientation support to C. As it evolved, C++ accumulated more features that can be found in other programming paradigms, such as templates from the meta-programming paradigm and lambda functions from the functional programming paradigm. Common Lisp also supports multiple programming paradigms, incorporating constructs from the symbolic, functional, and imperative programming paradigms, and from the object-oriented programming paradigm through CLOS. To illustrate the difference between styles, the following Java code checks whether a string contains an upper-case letter.

String s = "IloveProgramminG";
boolean found = false;
for (int i = 0; i < s.length(); ++i)
{
    if (Character.isUpperCase(s.charAt(i)))
    {
        found = true;
        break;
    }
}

Since Scala supports both functional and imperative styles, the above code can be written in Scala as follows.

val found = s.exists(_.isUpper)

In the Java example, the imperative style is evident since the found variable is reassigned while executing the for loop, which iterates through the characters in the string by indexing. In the Scala example, the exists method iterates through the collection of characters and passes each character to the function. If the exists method finds that the string contains an upper-case letter, then it returns true. Otherwise, it returns false. Thus the found variable is never reassigned.
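The same contrast can be sketched in Python, another language that supports both styles. The two function names below are hypothetical; the first follows the Java version step by step, and the second follows the Scala version by passing a test to a built-in that does the iteration.

```python
# Illustrative sketch only; function names are hypothetical.
def has_upper_imperative(s):
    """Imperative style: index through the string and reassign a flag."""
    found = False
    for i in range(len(s)):
        if s[i].isupper():
            found = True
            break
    return found


def has_upper_functional(s):
    """Functional style: no reassignment; any() stops at the first match."""
    return any(ch.isupper() for ch in s)
```

Both functions return the same answer for every string; the difference lies only in whether the programmer spells out the iteration and the state change.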

Shifts between Programming Paradigms

The Nature of the Problem

Most problems can be solved by starting from a known state and reaching an unknown state. However, some can be solved more easily in reverse by starting from an unknown state and reaching a known state because of the ability to trace back to facts that are already known. This is called the bottom-up approach, and it is mainly used by the object-oriented programming paradigm. Also, some complex problems are easier to solve when they are decomposed into many simple sub-problems. This is the top-down approach, and it is followed by most structured programming paradigms.

Hardware Design

The Von Neumann architecture considers a single processor handling sequential tasks and has the following properties:

- The data and programs are stored in the same memory.

- Memory is separate from the CPU.

- Instructions and data are piped from memory to the CPU.

- The results of operations in the CPU must be moved back to memory.

Thus, in this type of architecture, emphasis is placed on the state changing statements, mostly using reads and assignments, that best fit the procedural language programming paradigm.

However, since the speed of serial processors is no longer increasing, all new processors nowadays are multi-core, and the Von Neumann architecture has become the limiting factor in computer performance. The industry is therefore turning away from it, which has brought about a hybrid parallel or multi-core programming paradigm, of which MPI and OpenMP are examples.

Efficiency

In the 1950s and 1960s, it was difficult for programmers to understand machine language programs because the instructions were written in a sequence of 0s and 1s. Also, errors were hard to find. To overcome these obstacles, high-level languages such as BASIC, FORTRAN, COBOL, and C were developed. These languages had a syntax similar to that of English, better control structures, and mathematical expressions, which improved efficiency dramatically.

Cost

In the 1980s, the software industry began to face the rising complexity and cost of programming and was unable to meet customer demands. The procedural or structured paradigms then in vogue produced software that required constant maintenance due to the existence of too many subprograms and global data. This necessitated the development of object-oriented programming, which benefited both end users and the software industry. This type of programming provided ways to communicate among analysts, designers, programmers, and end users, facilitating the development of larger software projects. In addition, objects could be divided among teams and worked on in parallel, which decreased development time tremendously. Since the object-oriented programming paradigm focused on writing modular code, it was easy to develop, maintain, and reuse pieces. These cost-effective features made it the perfect paradigm for the era.

The Evolution of Exception/Error Handling

An exception is an error or event that occurs unexpectedly such as division by zero or being out of memory. Exception handling is a mechanism to deal with these errors when they arise, and greatly enhances program reliability and fault tolerance.

Exception handling was first introduced by the language PL/I in the 1960s and was significantly advanced in CLU in the 1970s. BASIC also provided limited exception handling. In BASIC, programs were written with a number identifying each line of code. Control was transferred by jumping to a specific line using the GOTO statement, and exceptions were handled using an ON ERROR GOTO statement.

1010 CALL FUNCTION_A
1020 IF NOT ERRORCODE = 0 GOTO 2000
1030 CALL FUNCTION_B
...
...
2000 IF ERRORCODE = 1 GOTO 2010
2010 IF ERRORCODE = 2 GOTO

Ada, standardized in 1983, is usually considered the first language with well-defined and usable exception handling. Mainstream adoption later came in the 1990s with C++. Exception handling now appears in almost all languages, including Smalltalk, C++, Java, and C#. Languages such as PL/I, Ada, Mesa, and CLU provide explicit exception handling mechanisms, while those such as Pascal, C, and FORTRAN do not.

In languages without exception handling mechanisms, other methods must be used to indicate and take care of exceptions. The most common method is to have functions return “special values.” If the value returned by a function indicates that an exception has occurred during the function call, then appropriate action is taken. However, this introduces several problems. First, it leads to programs that are difficult to read. Second, it increases programs’ size and complexity because of the necessary checks. Third, it makes programs inefficient, since the value returned by a function must be checked for an error value every time the function is called, even though the error may not occur at all. For example, C functions often return a special value such as -1 to indicate an error. A function such as int readFile(char* fileName) might return -1 both when it encounters an error and when it reaches the end of the file while reading a text file. Hence, a global variable must be checked every time the function returns to determine whether the -1 is an error value. In addition, status values must always be different from “legitimate” return values.
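The problems with special return values can be made concrete with a short sketch. It is written in Python but deliberately imitates the C convention described above; the names `ERROR`, `parse_reading`, and `total_readings` are hypothetical.

```python
# Illustrative sketch only; imitates C-style error codes, names are hypothetical.
ERROR = -1  # sentinel "special value" used to report failure


def parse_reading(text):
    """Return the integer in `text`, or ERROR if it cannot be parsed."""
    try:
        return int(text)
    except ValueError:
        return ERROR  # convert the failure into a status value, C style


def total_readings(lines):
    """Sum the readings; every call must be checked for the sentinel."""
    total = 0
    for line in lines:
        value = parse_reading(line)
        if value == ERROR:   # this check is needed after each call
            return ERROR     # and the code must be passed upward by hand
        total += value
    return total
```

The sketch shows two of the drawbacks named above. The checks clutter the main logic, and the sentinel collides with a legitimate value: `parse_reading("-1")` returns -1 for valid input, which a caller cannot distinguish from a failure.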

Exception Handling in PL/I

PL/I was developed by the IBM Corporation in the 1960s, although it was not standardized until 1976. The PL/I exception mechanism allowed the user to write handlers for both language-defined and user-defined exceptions. The programmer could specify exceptions using the lines of code below:

DECLARE <name_of_exception> CONDITION;
SIGNAL CONDITION(<name_of_exception>);

The binding of exceptions to handlers was dynamic, meaning that it applied to the most recently executed ON statement. Although the design was powerful and flexible, it posed some problems. First, dynamic binding made programs difficult to read and write. Second, continuation rules such as where to return control after built-in or user-defined exceptions occurred were difficult to implement and made programs harder to read, because some built-in exceptions returned control to the statement where the exception was raised, while others caused program termination. Moreover, user-defined exceptions could go to any place in the program.
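Why dynamic binding makes programs hard to read can be seen in a toy model. The Python sketch below is not PL/I and ignores details such as block scoping of handlers; it only assumes, as stated above, that a signal is handled by whichever ON statement was executed most recently. The class `Conditions` and the condition name `BAD_INPUT` are hypothetical.

```python
# Illustrative toy model only; not real PL/I semantics. Names are hypothetical.
class Conditions:
    """Keeps, for each condition, the handler established most recently."""

    def __init__(self):
        self._handlers = {}

    def on(self, name, handler):
        """Like executing an ON statement: replaces any earlier handler."""
        self._handlers[name] = handler

    def signal(self, name):
        """Like SIGNAL CONDITION(name): run whichever handler is bound right now."""
        handler = self._handlers.get(name)
        if handler is None:
            raise RuntimeError(f"no handler established for {name}")
        return handler()


def run(use_second_handler):
    conds = Conditions()
    conds.on("BAD_INPUT", lambda: "handled by first ON")
    if use_second_handler:  # which ON statements execute depends on the run
        conds.on("BAD_INPUT", lambda: "handled by second ON")
    return conds.signal("BAD_INPUT")
```

The same `signal` line is handled differently depending on the path the program took to reach it, so a reader cannot tell from the source text alone which handler will run.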

Exception Handling in CLU

The CLU language was developed by the Computation Structures Group at M.I.T. between 1974 and 1978. In CLU, a normal return from a routine indicates that the operation it implements has been successfully completed. However, if such completion proves to be impossible, the routine executes a signal statement instead, which then generates an exception. Handlers are specified by an except statement, which associates a list of exception handlers with a statement. For example, suppose an except statement attaches three handlers, h1, h2, and h3, to a block containing calls of power.

If the zero_divide exception is raised by an invocation in the block, handler h1 will be executed. If underflow or overflow occurs, h2 will be executed. If any other exception is raised, h3 will be executed.

The handlers will themselves usually be statement sequences, and may contain other except statements. However, CLU uses only a single-level termination model of exception handling, meaning that a routine can signal an exception only to its immediate caller, one level up. Thus, if a programmer wants to raise an exception more than one level up, they must raise the same exception again in the handler at each level. This method of exception propagation is called explicit propagation.
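Explicit propagation can be imitated in Python by having each routine return either a normal result or a signal to its immediate caller. This is a loose model rather than CLU itself: the helper names `ok`, `signal`, `scaled_power`, and `report` are hypothetical, and only `power` and `zero_divide` echo the example in the text.

```python
# Illustrative model only; not CLU. Helper names are hypothetical.
def ok(value):
    return ("ok", value)


def signal(name):
    return ("signal", name)


def power(base, exponent):
    """Innermost routine: signals zero_divide for 0 raised to a negative power."""
    if base == 0 and exponent < 0:
        return signal("zero_divide")
    return ok(base ** exponent)


def scaled_power(base, exponent, scale):
    """Middle routine: must signal the exception again to pass it one level up."""
    status, result = power(base, exponent)
    if status == "signal":
        return signal(result)  # explicit re-signal; nothing propagates by itself
    return ok(result * scale)


def report(base, exponent, scale):
    """Outer routine: handles what its immediate callee signalled."""
    status, result = scaled_power(base, exponent, scale)
    if status == "signal":
        return f"failed: {result}"
    return f"result: {result}"
```

If `scaled_power` omitted its re-signal step, `report` would never learn of the failure, which is the burden that explicit propagation places on every intermediate level.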

Exception Handling in Ada

Exceptions in Ada are bound to a handler locally when the block that raises the exception includes a handler for it. Otherwise, the exception is propagated up the call stack until a handler is found or until the end of the program is reached. Ada includes five built-in exceptions, for constraint, program, numeric, storage, and tasking errors, though user-defined exceptions can also be declared. In Ada, handlers can be attached to blocks, procedures, or packages. Handlers are placed in the exception clause, which should be positioned at the end of the protected region as below:

begin
    protected region of code
exception
    when Error1 => handler1
    when Error2 => handler2
    when others => handler3
end;

Ada represented a significant advance over PL/I in terms of exception handling, and it was the only widely used language that included this feature until the introduction of C++ and Java.

Exception Handling in Today’s Programming Languages

Today, most major programming languages have built-in exception handling mechanisms. The design of exception handling in C++ is based in part on that of CLU, Ada, and ML. Java’s is based on that of C++. In modern models, the try, throw, and catch statements usually implement exception handling. First, modern exception handling models usually use dynamic propagation. If the handler for a raised exception cannot be found locally, the program searches up the call stack through the enclosing try blocks until a matching handler is found or the default handler is called, which then aborts the program. Second, exceptions can be parameterized and can contain as much information as needed to describe the event, without additional overhead or change to the program’s structure. Third, modern models eliminate the use of a global variable to indicate errors, which can be problematic in concurrent programming, when different threads use these variables without synchronization mechanisms. Fourth, modern error handling is based on object-oriented design, which can easily resolve many classical exception handling issues such as creating user-defined exceptions, passing information to handlers, etc.
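These properties can be seen in a short Python sketch, where raise and except play the roles of throw and catch. The exception class `ConfigError` and the functions `read_port`, `build_address`, and `start` are hypothetical names used only for illustration.

```python
# Illustrative sketch only; all names are hypothetical.
class ConfigError(Exception):
    """User-defined exception object that carries details about the failure."""

    def __init__(self, key, reason):
        super().__init__(f"{key}: {reason}")
        self.key = key
        self.reason = reason


def read_port(settings):
    """Lowest level: raises the exception and has no local handler."""
    if "port" not in settings:
        raise ConfigError("port", "missing")
    return int(settings["port"])


def build_address(settings):
    """Middle level: contains no try block, so exceptions pass through it."""
    return f"localhost:{read_port(settings)}"


def start(settings):
    """Top level: the first matching handler found while unwinding the stack."""
    try:
        return build_address(settings)
    except ConfigError as err:
        return f"cannot start ({err.key} is {err.reason})"
```

The middle function needs no error-checking code at all, the exception object carries its own parameters to the handler, and no global error variable is involved.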

Improvements in Error/Exception Handling

In the beginning of software design, the standard way of handling errors was to use error codes with some ways of identifying them. The problem with this approach is that, in larger systems, error management can become difficult. Different programmers may use different values for handling errors without documenting them properly, which causes inconsistency. Also, it is not easy for the compiler to check that the code is consistent. Thus, code to manage these kinds of errors can often confuse the original intent of the program, making it difficult to read and to maintain. Moreover, each called function must be checked for the returned value, which can be cumbersome for programmers. Using error codes also imposes difficulties when changing functions. For example, in C, the char function() may be replaced by the int function(). Since the return types are different, the code must be changed as well in order to get the error value.

In languages like BASIC, the user can define an ON ERROR GOTO statement at the beginning of the program to specify the error handler. Whenever an error is detected, the error handler is triggered. However, this approach fails to restrict the flow of control, since the handler can jump to any part of the program using the GOTO statement. The greatest advantage of these early mechanisms was that programmers were forced to think about exceptions and how to handle them, which may have led to the discovery of more faults during the design and implementation of new programming languages.

Conclusion

In this paper, we explored the important programming paradigms and error/exception handling processes throughout the history of computer design and discussed them in detail. At first, computers were used for mathematical and scientific calculations. However, as the hardware evolved and problems became more complex, the need arose for a variety of programming languages and paradigms. Functional programming, which is based on the theory of functions, is a simpler and cleaner programming paradigm than the procedural or imperative paradigm that preceded it. The logic paradigm differs from the other three main programming paradigms, and works well when applied in domains that deal with the extraction of knowledge from basic facts and relations. The latest step in programming evolution, the object-oriented programming paradigm, uses object models and is a more natural way of solving problems than earlier paradigms. Its use of concepts such as abstraction, encapsulation, polymorphism, and inheritance gives it great flexibility and usability. The way programming languages handle errors has also evolved from using simple error codes, through the implementation of dynamic propagation, to treating exceptions as objects. We have also seen that the software industry’s constant demand for better hardware design, greater efficiency, and lower cost has precipitated the shift from one programming paradigm to another. Finding and dealing with exceptions has also been instrumental to the development of new programs, especially large ones. It is almost inevitable that another paradigm shift will occur in the future to make programming better for both producer and consumer.
