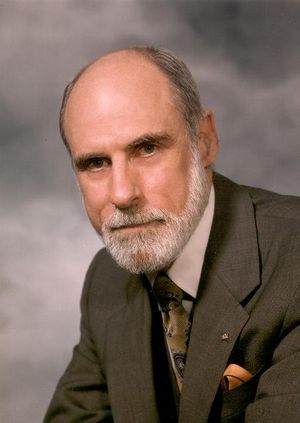

Oral-History:Vinton Cerf

About Vinton Cerf

Cerf received his bachelor's degree in mathematics from Stanford (1965); worked at IBM (1965-67); went to UCLA for graduate school (PhD 1972); taught at Stanford (1972-76); worked at ARPA (1976-1982); worked at MCI (1982-1986); worked at the Corporation for National Research Initiatives (CNRI) (1986-94); and then returned to MCI (1994). While a graduate student at UCLA, he worked under Gerald Estrin, and with Leonard Kleinrock, on the setup of the ARPANET: Cerf worked on the protocols for the ARPANET and was the lead developer of the host software. At Stanford, Cerf and Bob Kahn developed the Transmission Control Protocol (TCP) in 1973-74, the basic design for the Internet and its TCP protocols. These protocols were put into use across the network on 1 January 1983; shortly before then, in late 1982, Cerf had gone to MCI to help develop MCI Mail, a “digital post office.” In 1988 he worked to attach commercial email providers to the Internet, to provide a self-supporting commercial engine for Internet growth. At CNRI he worked to have the Internet Society take over from the government the funding of Internet protocol standardization and other basic support functions. Returning to MCI, he has worked to get MCI into the Internet business. He has also helped design an Interplanetary Internet as part of the Mars Mission effort. Cerf also explains much of the history of the Internet, describes some of its politics, defines key terms, and describes its structure. He explains the function of the Internet Society, describes his (minimal) activity in IEEE, and speculates on the growth and impact of the Internet on the economy, society, and culture.

See Robert Kahn Oral History and Robert Metcalfe Oral History for further discussion of the development of ARPANET and of the Internet.

About the Interview

Dr. Vinton Cerf: An Interview Conducted by David Hochfelder, IEEE History Center, 17 May 1999

Interview #355 For the IEEE History Center, The Institute of Electrical and Electronics Engineers, Inc.

Copyright Statement

This manuscript is being made available for research purposes only. All literary rights in the manuscript, including the right to publish, are reserved to the IEEE History Center. No part of the manuscript may be quoted for publication without the written permission of the Director of IEEE History Center.

Request for permission to quote for publication should be addressed to the IEEE History Center Oral History Program, IEEE History Center, 445 Hoes Lane, Piscataway, NJ 08854 USA or ieee-history@ieee.org. It should include identification of the specific passages to be quoted, anticipated use of the passages, and identification of the user.

It is recommended that this oral history be cited as follows:

Dr. Vinton Cerf, an oral history conducted 17 May 1999 by David Hochfelder, IEEE History Center, Piscataway, NJ, USA

Interview

INTERVIEWER: David Hochfelder

DATE: 17 May 1999

PLACE: MCI Worldcom, Reston, Virginia

Education and early employment

Hochfelder:

I would like to first get a sense of your career and your involvement with the evolution of the Internet. I guess we could start by talking about your early career, maybe your graduate work at UCLA, and your first jobs out of grad school.

Cerf:

It might be worth going back just a little bit before UCLA. My undergraduate work at Stanford was in mathematics, but I also was strongly attracted to computers at the time. I took all of the computer science courses I could take at Stanford. After I graduated in 1965, I decided that I wanted to work in this area as opposed to being a research mathematician or going on to more graduate work in mathematics. So, I got a job with IBM in Los Angeles.

Originally, I was expecting to be shipped off to Los Alamos to help install the first 360/91 in use there. This was in either June or July of 1965, after I had graduated. It turned out that, for reasons I do not understand and never found out, it was not ready to be installed. Consequently, they reallocated me to a Los Angeles data center in downtown L.A. that was operating a service called QUIKTRAN. QUIKTRAN was an interactive FORTRAN programming system. You actually rented the IBM 1052 terminal and you made a dial-up call on a 300 bit per second modem. Perhaps it was even less than that, 110 baud at the time.

You would type in your programs and run them on a time-sharing system that was built in the early 1960s. It ran on an IBM 7044, which is a 36-bit machine, sort of a relative of the 7090 model, which was faster. So, my introduction to commercial computing, in some sense, was through a time-sharing system.

After two years, I realized that I didn’t have enough training and background in operating systems to really feel comfortable that I knew what I was doing, even though I was maintaining the system and making changes to it by either adding features or fixing bugs. So, after a couple of years at IBM, around 1967, I decided to go back to graduate school. By a stroke of good fortune, my best friend in high school, a guy named Steve Crocker, happened to be at UCLA doing his graduate work. He introduced me to the man who became my thesis advisor, a guy named Gerald Estrin. Somewhere around March of ’67 or so, I concluded I needed to go to graduate school.

Then, by another stroke of good fortune, I met another guy named Leonard Kleinrock, in what was then a part of UCLA’s electrical engineering department that later became its computer science department. Kleinrock had, in his graduate days at MIT, invented the idea of packet switching. Now there are other people who had come up with this idea as well, coming at it from different directions. Paul Baran came up with this idea around 1962 when he was working at Rand, but he was applying it to packet mode voice for military command and control, and it was a highly failure-resistant, recoverable system. Kleinrock was looking at it from the standpoint of packet mode communications, looking at stochastic message switching [phone] systems. Another guy at the National Physical Laboratory in England, Donald W. Davies (who incidentally worked at Bletchley Park on the breaking of the Enigma codes during World War II), was experimenting with what he called “packet” switching. This is again a computer communications technique that breaks communications down into blocks of information which have addresses on them indicating where they are going, very similar to a postcard except that it is electronic.

Three guys had been involved in all this. Of course, I did not know any of that at the time I came to UCLA. But leaping forward a little bit, it turned out that the work those three gentlemen did in pioneering the concept of packet switching was fundamental to the evolution of both the ARPANET and its related packet switching applications, and of course ultimately the Internet. Consequently, I went to work first for Professor Gerald Estrin, who remained my thesis advisor for my five-year stay at UCLA. I also worked as a programmer for Gerry Estrin for a time.

ARPANET

Cerf:

When that project ended, Leonard Kleinrock made a proposal to the Defense Advanced Research Projects Agency (ARPA) to run what was called the Network Measurement Center for a brand new idea called the ARPANET. The ARPANET was a packet-switched, wide-area communication system based on what at the time were considered broadband telecommunication links. In this case, that meant 50 kilobit per second connections, which is of course roughly what you get today with a dial-up modem. Back then, however, it required a unit about half the size of a refrigerator and used twelve telephone lines lashed together.

Kleinrock was very much involved in Larry Roberts’ decision to pursue packet-switched communications as the basis for what was called the ARPANET. Larry Roberts had been at Lincoln Laboratories, and he had been doing experiments with Tom Marill linking the TX-2 machine at MIT Lincoln Labs to the Q32 machine at System Development Corporation in Santa Monica, California. This was around 1966, which is a little bit before I actually got to UCLA. They demonstrated over a rather low-bandwidth connection that packet switching might work between two computers with dissimilar operating systems.

In the summer of 1968, Bob Taylor, who was then the Information Processing Techniques Office (IPTO) director, proposed to the then ARPA director that a network should be built that would connect the various computers that ARPA was paying for at different university campuses. From a single terminal, you could get access to all of them instead of having separate, distinct terminals to connect to each computer independently. It was Taylor who managed to, how do I say this gently, extract Larry Roberts from Lincoln Laboratories up in the Boston area and reposition him at ARPA to run the ARPANET project. He did this by bringing some pressure to bear on the director of Lincoln Laboratories through the then head of ARPA, Charles Herzfeld.

In any event, Larry Roberts came down to ARPA to run this project, and they issued an RFP around mid-1968. It went to quite a number of companies around the U.S. I remember consulting as a graduate student, with my friend Steve Crocker, for a company called Jacobi Systems that subsequently bid on the ARPANET project. It had developed a time-sharing service on some Interdata machines, models 3 and 5, which were connected to a big Univac 1108 for batch-type processing. As a result, you did interactive work and then you submitted a batch job. This was all part of what we called MINITS, for mini time-sharing system. Crocker, I, and others worked on this product. When the ARPANET RFP was released, Jacobi Systems decided to bid. It submitted a proposal that made some progress but was eventually overtaken by someone with a better proposal than ours.

Anyway, Jacobi Systems went out of business eventually, but Crocker and I were very fortunate because when Kleinrock had proposed to develop his Network Measurement Center, ARPA funded him. We wound up working for Len Kleinrock as graduate students in his Network Measurement Center. Some other legendary names from the Internet world were part of that project. One of them was Jonathan Postel, who has now passed away, and another was Robert Braden, who is still going strong. We first worked on a time-sharing system so that we would have a machine to use as the basis for building a network measuring system. From Xerox Data Systems, originally called Scientific Data Systems, which was bought by Xerox, we received a Sigma 7 computer. It was one of the first computers to come off their production line. It was like a commercialized Digital Equipment Corporation 940 machine, which itself ultimately turned into the PDP-10 after a lot of work.

We designed and built an operating system for it which we called the Sigma Experimental Time-sharing System, or SEX for short. Of course, the SEX users manual was a very popular document around the lab. Crocker became the head of the graduate student team that developed the ARPANET protocols. This was called the Network Working Group. I had two jobs. For Len Kleinrock, I was the principal programmer on the Network Measurement Center. So, I developed all of the host software that was used to actually exercise the ARPANET from UCLA. By this I mean to inject traffic into it, to measure the results that came back, to try to break it by pumping more traffic into it than it could handle, things like that. I also participated with the Network Working Group in the development of the ARPANET protocols, the host-to-host protocols and the higher-level protocols like Telnet for remote access, File Transfer Protocol (FTP), and email. So, I got a dose of both halves of this thing.

It was because of my work at the Network Measurement Center that I met Bob Kahn. Now we have to understand where he enters this picture I have created. Bob had been teaching at MIT after getting his Ph.D. from Princeton, and was persuaded by John Wozencraft that he should spend some time in industry. He went to Bolt, Beranek and Newman, or BBN for short. Bolt Beranek and Newman was the company that won the bid to develop the ARPANET. Bob Kahn was a major contributor to the actual proposal that had been put together. He was one of the principal architects of the system. After BBN won the contract, which was around December of ’68, they worked like demons for nine months. The team included Bob Kahn and Frank Heart, who was (sort of) the project manager for this effort. Bob was one of the principal architects, but there were at least a dozen other people.

They got this thing done in September of 1969, which was almost 30 years ago. Steve Crocker had been hoping that they would be somewhat delayed in delivery, since Labor Day weekend was coming up and our software wasn’t quite ready, frankly, to accept this IMP, as the packet switches of the ARPANET were called. IMP stands for Interface Message Processor. So they air-shipped the thing, and it arrived two days earlier than we expected. It showed up and we all scrambled to get the software and the hardware finished. A guy named Mike Wingfield built the first interface between the Sigma 7 computer and the IMP, the ARPANET packet switch. I remember it was based on a report that Bob Kahn had also authored called “BBN 1822.”

So anyhow, to make a long story longer, around, I don’t know, late in the year 1969, or perhaps early in 1970, Bob Kahn came out with another of his colleagues, David Walden, to actually try the network out and see what happened. By this time we had a four-way network. It connected UCLA; SRI International, which at that time was probably still called the Stanford Research Institute; UC Santa Barbara; and the University of Utah. Bob came out and I met him for the first time. He wanted me to do a whole series of traffic tests on the net. What he wanted to do was to create conditions in which the network would lock up, because he believed that the design and the algorithms that were used were in fact vulnerable to certain lock-up conditions. But nobody at BBN believed him, or they thought the probability was so low that it would never happen. And so Bob’s purpose was not only to characterize the performance of the net, but to actually force it into some situation that showed they had to do additional work on the algorithms. Lo and behold, we knocked the net down several times by locking it up in different ways. Over this period of a couple of weeks, Bob and I worked together quite intensely. He would come in with a set of requirements, and I would program something overnight. Then we’d run a new set of tests.

So we got to know each other pretty well, and not too long after that, a couple of years or so, Larry Roberts asked Bob Kahn to demonstrate the ARPANET by having a public show in connection with something called the International Conference on Computer Communications (ICCC). This first conference was held in Washington, D.C., and we installed, or rather BBN installed, a terminal interface processor, sort of an augmented IMP, in the basement of the Washington Hilton Hotel. It had two 50 kilobit circuits going into it, and then about 60 terminals of all different varieties. The whole idea here was to show that you could make the ARPANET work with almost any kind of terminal equipment. So it took us almost a year to prepare for this thing. Some names that you will also recognize were very much involved in this. Bob Metcalfe, for example, the guy who invented Ethernet, was responsible for writing the users manual, a 30-page manual that described step by step, to someone who had just walked in and sat down at a terminal, what to do in order to get connected to and run a service at UCLA, or at MIT, or Harvard, or what have you. We spent a year preparing for this, and had a week’s worth of demonstrations at the ICCC. That turned out to have been a very important meeting, because it was very international.

The notion of packet switching had been kicking around for a while by this time; it’s 1972, and the ARPANET has been around and has now been publicly demonstrated after three years of implementation. And so many people from outside the United States came to this ICCC meeting, and we organized a sort of . . . shall we call it a meeting, of about 25 or 30 people, many of whom had come from Europe and had been very much involved in packet switching. Peter Kirstein, who was at University College London, participated; he later became very instrumental in UK involvement in the ARPANET, packet switching, and the Internet. Louis Pouzin, who was one of the pioneers of packet switching in France, was there along with several of his colleagues. Roger Scantlebury was there from Donald Davies’ lab. I honestly don’t remember whether Donald Davies made it or not.

In the course of this meeting, where we were talking about building an international network working group to pursue packet switching concepts, Steve Crocker, who clearly had led the development of the ARPANET protocols, was the obvious candidate to run this group, except that he was going to ARPA. He had not even finished his graduate degree yet; he was just going to take leave and go work at ARPA for a while. Well, I was on my way up to Stanford University, having finished my Ph.D. in March of ’72, and starting at Stanford in the fall. And so somehow or another I wound up being asked to lead this international network working group. I guess they assumed that assistant professors had an infinite amount of time to do these things. So I went to Stanford at the same time that Bob Kahn left BBN and went to ARPA to run packet programs.

And Larry Roberts and Barry Wessler left ARPA to go to work at BBN, where they started what became Telenet, which GTE acquired from BBN some time back. Is that right? Yes, that’s right. So, I mean, there’s an incredible shifting around of roles in everything.

Meanwhile, I’m at Stanford, and it’s now late ’72 or early ’73. Bob Kahn is now at ARPA, and begins to create new programs and to continue work on programs that Larry Roberts had started when he was still at ARPA. In particular, there was packet radio, a mobile data communication system based on packet switching that used ground mobile radios, and there was packet satellite, which used a shared radio channel among many ground stations, all of them competing for access to a common resource on a satellite. These two shared-medium networks became the stalking horses for the design of the Internet, because they functioned so differently from the ARPANET that when we asked ourselves how to hook them together, we had quite a challenge on our hands. The packet sizes weren’t the same. The data rates were different. The addressing structures were different. And yet we wanted to ensure that the military would have the ability to deploy computers anywhere it needed them, and communicate uniformly across this multiplicity of different packet-switched networks.

That became known, at least to Bob and me, as the Internet problem, as we were trying to connect nets to each other. So in ’73, spring of ’73, Bob Kahn came out to Stanford University to tell me about what was going on. He had a packet radio program underway at SRI and was asking himself, “How do I hook these things together?” So he and I worked on that problem together during 1973. In September we issued a paper to the International Network Working Group: INWG Document 39. That paper was briefed in September of ’73 at the University of Sussex, where the INWG had scheduled a meeting in conjunction with another five-day conference on computer communication that the University of Sussex was sponsoring. Bob and I presented the basic idea behind what is now called TCP, the Transmission Control Protocol, at that meeting in September of ’73.

Transmission Control Protocol (TCP)

Cerf:

Then we went back home and holed up in one of the hotels in the Palo Alto area. We essentially wrote the paper, which was published in May of 1974 in IEEE Transactions on Communications, as the seminal paper on the design of the Internet and its TCP protocols. That all happened almost exactly 25 years ago. Subsequent to the publication of the paper, I accepted a research grant from DARPA to carry out the detailed design of the protocols. As a result, during 1974, I ran a seminar with a collection of really smart graduate students working on the detailed design of TCP.

What was of course ironic is that in this 1973 time period, Bob Metcalfe was busy inventing Ethernet over at Xerox PARC, about two miles away from my office. People from Xerox PARC would come to the seminar and we would have debates and discussions. They were working on something called PUP, or PARC Universal Packet, on top of which they ultimately put what’s called Xerox Network Systems, or XNS. We didn’t actually agree on all of the design choices, and so they went a somewhat different way from the TCP/IP path. In the long run, however, they chose to keep their Xerox protocol proprietary, whereas Bob Kahn and I felt that we really needed TCP to be a very easily accessible design so that anyone could build an instance of TCP. We went through some four iterations of the TCP design, and in the last iterations, we split IP out from the TCP protocol.

Hochfelder:

Yes, and this was Bob Kahn, not Bob Metcalfe?

Cerf:

Yes. Actually, by this time, Bob Kahn was not much involved in the details at all. It was the group at Stanford that did the initial detailed design of TCP. I mean, they did the detailed design, where we got down to the specifics of state diagrams and everything else, such as packet formats. We published that in December of ’74. Then implementation work began, and we got involved with Bolt Beranek and Newman and University College London to collaborate on the testing of the protocols. So, we started the testing work in 1975, after having completed the first specification. We almost instantly discovered we needed to make changes in the design. We went through several iterations.

Another person whose name is ironically tied up in all this is Judy Estrin, who is now the Chief Technology Officer at Cisco Systems. Judy was a graduate student at Stanford at the time we were doing this work. During 1975, she would come in and do tests at three in the morning, because there was a nine-hour time difference between the West Coast of the U.S. and University College London. In order to get at least a half-day overlap, she had to come in very early to do the tests. The other irony, of course, is that her father, Jerry Estrin, was my thesis advisor at UCLA. So everything is connected to everything, which is a good sign of networking, I guess.

Hochfelder:

Right.

Cerf:

This takes you up to around 1978, when we standardized on version 4 of the TCP protocol, which is what you are using today. It took us another five years to complete implementations of the now standardized TCP/IP protocols on about 30 different operating systems. Then we required that everyone flip over on January 1, 1983 from the existing ARPANET protocols to the new Internet protocols. There was a lot of screaming and yelling and protests.

In the year preceding all of this, we had tremendous help from USC Information Sciences Institute, and from Dan Lynch, who was the computer center director there at the time. He sort of helped to manage the whole transition process of going from the old protocols to the new ones. Not everybody made it on January 1st, and so exceptions were granted and people were allowed to function with the old protocols for a period of time. But I would guess that by June, we had either gotten everybody converted, or they had given up on their machine and sort of fallen off the net.

I had come to ARPA in 1976, after having said “no” the preceding year. I had concluded that maybe it would be more effective for me to be at ARPA and have resources to spend on further development of Internet. I was persuaded to come back here, now twenty-three years ago. I stayed at ARPA and managed the Internet program and the packet communications technology program, which included packet radio and packet satellite, as well as the packet security program, which involved the development of packet mode cryptography.

Employment at MCI

Cerf:

I did that work until fairly late in 1982, at which point I started doing arithmetic on the cost of my son’s college education, and realized that I wasn’t likely to be able to pay for it if I stayed on a government salary. I’d been at ARPA at that time for six years. Consequently, I was persuaded by a guy named Robert Harcharik (H-A-R-C-H-A-R-I-K, a Polish name), who was then at MCI, that I might like to come and work at MCI. Another little irony here is that the year before, in 1981, I’d gotten an invitation from a guy named Phil Nyborg. Phil, who used to run the Washington office of AFIPS, the American Federation of Information Processing Societies, had gone to work for MCI and was a special assistant to the then chairman of the board, Bill McGowan, one of the founders of MCI. Phil called and said that McGowan and a guy named Orville Wright (not the one who did the airplane, but the one who was vice chairman of MCI) wanted to meet me because they were thinking they might need a chief scientist.

I went over there in 1981, and we had a four-hour conversation. At the end of the talk, we all concluded they did not need a chief scientist. They were not into the research side of R&D. Their strategy was to dangle quarter-billion-dollar checks in front of people, saying, “If you’ll build it this way, we’ll buy a quarter of a billion dollars’ worth of it.” This was remarkably motivating.

As a result, in 1981, I concluded that Bill and Orville didn’t need my services and I continued at ARPA.

But then Harcharik came along around October of ’82, or a little bit before that, and told me about a project that he had in mind called the digital post office. That fascinated me. We had made tremendous use of email within the Internet community, and so I was quite fascinated with this idea of building a digital post office. I agreed to come and work for Bob Harcharik at MCI starting around October of ’82. I actually left the ARPA agency and the Internet Project in late ’82, just as they were about to transition to the new protocols. They were on their way, and there wasn’t much more I could do. I’d done my part.

As a result, I simply left the Internet to others. David Clark, who served so well in many capacities, was named the Internet architect as I departed, and Jonathan Postel was named the deputy architect. I joined MCI in October of ’82 to build what is now called MCI Mail. We launched that product on September 27, 1983, and continued to develop it for some years after that.

About four years into that program, I realized that the company had essentially done everything that it needed to do with MCI Mail. It had a telex interface. You could send and receive telex messages on it. It had a print interface. You could send and receive to postal addresses using the MCI mail system. You just typed in a postal address electronically, and the system figured out where it was going, routed it to a place where it was printed, stuck in an envelope, and mailed it. Or you could even do an overnight. Then later fax was added, although at the time there wasn’t a whole lot of faxing going on.

I was pretty proud of this work, because it took real signatures. You could digitize your signature, and it would appear on the paper copy of the email message, which was then printed, stuck in an envelope, and sent overnight or by postal service. This was in addition to being able to send and receive ordinary email and telexes. That system has persisted to this day.

Corporation for National Research Initiatives and a self-supporting Internet

Cerf:

In 1986, Bob Kahn, who had left ARPA the year before after 13 years, persuaded me to join him in starting a company called the Corporation for National Research Initiatives. He asked, “Are you interested in going back into research from industry?” I thought long and hard about that and decided that maybe this would be a good thing to do, because I had basically used up my rain barrel of ideas from ARPA days building the MCI Mail service. As a result, I agreed to return to research with Bob Kahn and served eight years as vice president of CNRI, here in Reston. It was there that Bob and I spent so much time thinking about and working on things like information infrastructure, which was not a buzzword at the time. We also worked on designing and developing digital libraries and on knowledge robots.

Interestingly enough, while I was there, around 1988, I realized that the Internet would not likely get very big if it didn’t become a self-supporting system. It had to become a commercial engine, as opposed to simply being something that the government bought and paid for. The problem was that the Internet at the time was strictly for use by research and education organizations. The government’s acceptable use policy placed strong restrictions on it. By 1988, the National Science Foundation had already launched its NSFNET backbone program. So, we had a growing network, but it was limited in its scope. I had gone to an Interop show, which was started by a guy named Dan Lynch, whose name I mentioned earlier.

Dan started this thing in 1986 as a kind of tutorial affair. But it became an exhibition and grew to 50,000 or more people coming; he later sold it to Ziff Davis, which later sold it to Masayoshi Son, [that company Bell South named]. Anyway, the reason I bring this up is that it was at an Interop show in 1988 that I realized that this network was potentially going to be a big deal. This was only 15 years into the program from my perspective. The reason I came to that conclusion was that I walked into the show and saw some enormous displays. I happened to be with Eric Benhamou at the time, who is now the CEO of 3Com. I asked Eric, “How much do these displays cost?” These huge booths, with all the people manning them, could run a quarter of a million to half a million dollars. I remember thinking, “Oh my God, industry is putting real money into this. Maybe this is real.” I then thought we’ve got to do something, because it can’t be commercialized unless we get the government to change its policy.

As a relatively new employee of CNRI, a couple of years into working there, I went to the Federal Networking Council and asked them for permission to hook up MCI Mail, which was a commercial email service, to the Internet. My purpose was twofold. First, I wanted to see what would happen if we did that, because the two systems were not necessarily compatible. Second, I wanted to break the policy logjam that said you couldn’t put anything commercial on the Internet, because I figured that until we did that, we’d never be able to turn it into a self-supporting system and it would never spread.

Much to my astonishment, they said yes, and at CNRI, David Ely, who was the principal programmer there at the time, developed an implementation of a gateway that would link the MCI Mail system to the Internet and vice versa. We rolled that out in mid-1989. Of course, as soon as we announced it, every other commercial email service provider insisted on having a connection to the Internet, because they didn’t want to give MCI a competitive edge over everybody else.

The result of my action was twofold. First, an awful lot of email services that previously couldn’t interwork now could, through the Internet. Second, we broke the policy logjam that had kept commercial services off the net. Now there isn’t any such restriction.

Not too many years after that, while I was still at CNRI, we began to realize that government funding for things like Internet standards making might eventually go away, because the government might conclude that industry should be paying for it. As a result, in 1991, a small group of us concluded that we should start an organization that would ultimately take responsibility for funding the secretariat for standardizing Internet protocols. That organization is now called the Internet Society.

The Internet Society, return to MCI

Cerf:

We announced in June of ’91 that we would create such a thing, and I served as its first president from ’92 to ’95. During that period I went through another career transition. Bob Harcharik, who had led the MCI Mail project, retired in 1986, about the same time I joined CNRI. He was persuaded to come back to the company in 1993 to get MCI into the data business, specifically the Internet business as well as frame relay. He called me up and asked whether I had any interest in returning to MCI. I struggled with that for some weeks, because I was having a very good time in the R and D business. But eventually I realized that working at MCI might give me a little more time to serve as president of the Internet Society. I accepted Harcharik’s offer.

Interestingly enough, this was the second time in my career that Bob Harcharik had extracted me from a Bob Kahn operation. The two of them were old rivals for my attention, but they are good friends; they play golf together. Twice in their careers they were at opposite poles over where I would be employed. So I returned to MCI in 1994, and my job there was to get MCI into the Internet business.

Let me go way back to 1979 for a moment. I was still at ARPA, and it became apparent that we needed a team of people who were deeply aware of what was happening to the Internet. So I put together a little kitchen cabinet called the Internet Configuration Control Board. We wanted it to sound as boring as possible so that no one would particularly press to be on it. I included in that group David Clark, who has been one of the key contributors to the design and implementation of the Internet; Jon Postel and Bob Braden; and Steve Kent, who is a major source of expertise in security at BBN.

I think David Mills, who has gone from Linkabit, a commercial concern, to the University of Delaware [and University of Pennsylvania], was very interested in the architecture of these kinds of things. The original Fuzzball software, running on a Digital Equipment Corporation PDP-11, was turned into the first NSFNET router implementation. This was before the work was outsourced again to a triumvirate of MCI, IBM, and Merit. Merit is a network team at the University of Michigan that had been running university networks in the state of Michigan. The three organizations took on the job of building the NSFNET backbone.

Anyway, I bring up the ICCB simply because shortly after I left ARPA, Barry Leiner picked up the responsibility for the tasks I had been doing and restructured the ICCB into the Internet Activities Board (IAB). Each member of the board was, in fact, the head of a working group or task force, and there were a number of different task forces. One was the IRTF, the Internet Research Task Force; another was the IETF, the Internet Engineering Task Force; and there were a bunch of others that were specific to topics. Barry ran that IAB operation. Then in 1992 we had the very first official meeting of the Internet Society, an annual meeting called INET, in Tokyo, Japan. At that meeting it was proposed, and accepted, that the Internet Activities Board become part of and operate under the auspices of the Internet Society.

Somewhere along the line, I guess after I returned to CNRI, I became a member of the Internet Activities Board and eventually served as its chair for three years from 1989 to 1992.

By 1992, although I was no longer a member of the IAB, I had become president of the Internet Society. We were concerned that the Internet Activities Board should have some place to call home, because none of the government agencies was interested any longer in pursuing this activity as deeply as they had. The Internet Society offered to house what became the Internet Architecture Board, and this did come to pass. Since then, the IAB, the IRTF (the research task force), and now the IETF (the engineering task force) have all functioned under the auspices of the Internet Society. That was accompanied by a considerable amount of Sturm und Drang at the time.

It’s probably not worth detailing here, but the Internet has certainly had its share of politics, as you may well imagine, particularly as it became more economically important. Politics seems to go up as a function of the economic value of things. I knew the Internet was going to get big when the attorneys started getting interested in it.

That almost takes us up to the present. The only other thing I would add to this narrative is that after MCI launched its Internet services in 1994, we also built something called the Very High Performance Backbone Network Service, or vBNS, for NSF (the National Science Foundation), specifically for use by the research and education community, not the public. Then, in late 1997, I began to wonder what we should be doing now to prepare for the needs of the network 20 years from now.

Application of Internet Protocols to space exploration

Cerf:

It was in the course of thinking about that that I concluded we should be working on an inter-planetary Internet design. I started to talk about that in late ’97, and one of the engineers here at MCI who had formerly worked at the Jet Propulsion Laboratory put me in touch with his former colleagues. It turned out that they had been experimenting with the idea of using Internet protocols in space. We got together, and the synergy was very powerful.

Not very long thereafter, after a few months of work, we briefed many people at NASA, and as a result the administrator, Dan Goldin, approved the Inter-planetary Internet Project as part of the Mars Mission Effort. In this effort, a new piece of mission equipment will be launched every 26 months and either put into orbit around Mars or placed on its surface. We now expect to have satellites in orbit around Mars forming a piece of the Inter-planetary Internet by 2008. Our objective in all of this is to build up an infrastructure that subsequent missions can use for communications, command, and control.

Typically, in the past, missions were launched with their own organic communications capability, but couldn’t interwork with anything else. Consequently, standards for space communications have developed slowly. The CCSDS link protocol, a channel-based protocol, has been standardized by the Consultative Committee for Space Data Systems. We are going to take the CCSDS design and use it to carry embedded Internet packets from planet to planet. Frankly, I’m surprised that the project is moving along as quickly as it is. To think that we will have a two-planet Internet by 2008 is really quite stunning. That takes us pretty much to the present.

Packet Switching, TCP

Hochfelder:

Yes, sounds like a very fascinating and varied career. If I could stop and back up a little bit, there are some technical terms you mentioned that I’d like to clarify. First, what is packet switching?

Cerf:

Let me start with the circuit-switched telephone network. The most illustrative way to describe these things might be to compare circuit switching to dedicating a piece of highway from Los Angeles to New York while you’re riding your bicycle on it: no one else is allowed on the bicycle path until you get to New York, at which point you get off and somebody else can get on. It’s a very inefficient use of the available capacity. Packet switching is more like what you see on the road today, where you have a big wide freeway with lots and lots of cars all sharing this common highway. As long as they don’t bump into each other, you actually get much better use of the capacity of the highway. So packet switching is basically like multiple cars using the same facilities one right after the other.

Another analogy for packet switching that I find quite appealing is the postcard, because a postcard bears a lot of resemblance to an electronic packet. Both have a finite size, and just like a postcard, an electronic packet has a source and destination address. There is no guarantee that a packet you put into the system will come out, just as there’s no guarantee that a postcard you put into the postal service comes out. If you put two postcards into the postal service, they don’t necessarily come out in the same order you put them in, and this is also true of Internet packets. Everything we know about postcards applies to Internet packets, except that Internet packets travel a hundred million times faster than a postcard. Of course, that speed doesn’t make them any more reliable. So, to make the electronic postcard notion work reliably, you have to layer additional procedures, protocols, and standards on top of it between the source and destination.
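
The postcard properties Cerf lists (finite size, source and destination addresses, possible loss, possible reordering) can be mimicked in a few lines. This is a minimal Python sketch of the analogy only, not a model of real IP; `Postcard`, `postal_service`, and the 64-character `MAX_PAYLOAD` limit are hypothetical names and values chosen for illustration.

```python
import random
from dataclasses import dataclass

MAX_PAYLOAD = 64  # hypothetical limit: a postcard only holds so much text


@dataclass(frozen=True)
class Postcard:
    """A finite-size packet carrying a source and a destination address."""
    src: str
    dst: str
    payload: str


def postal_service(cards, loss_rate=0.1, rng=None):
    """Hypothetical unreliable carrier: each card may be lost, and the
    survivors come out in an arbitrary order. Nothing is guaranteed."""
    rng = rng or random.Random()
    for card in cards:
        if len(card.payload) > MAX_PAYLOAD:
            raise ValueError("payload does not fit on one postcard")
    # A card survives with probability (1 - loss_rate).
    delivered = [card for card in cards if rng.random() >= loss_rate]
    rng.shuffle(delivered)  # arrival order need not match sending order
    return delivered


cards = [Postcard("alice", "bob", f"card {i}") for i in range(10)]
arrived = postal_service(cards, loss_rate=0.3, rng=random.Random(7))
print(len(arrived), "of", len(cards), "cards arrived")
```

Anything that needs reliability or ordering has to be built on top of a service like this, which is the job the following answers assign to TCP.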

For Internet purposes, we invented TCP, the Transmission Control Protocol, which does in fact provide reliability, sequencing, and flow control. You don’t necessarily get those from the underlying basic Internet packets. The community of Internet researchers keeps building up layer after layer of protocol and application structure to take advantage of the common support below, say at the packet level. As each protocol gets layered on top, it takes advantage of whatever the underlying layer is capable of supporting.

Hochfelder:

Yes, let me see if I have this straight. The whole concept of packet switching goes back to the early ’60s?

Cerf:

Yes, it does. It wasn’t necessarily always called that, but you can push the analogy further back to teletypes, for example, where punched paper tape usually conveyed a finite-length message. The way you used to switch through the system was to punch out the message and transmit it to another teletype, which punched out a copy of the message. Then you hung the tape on a peg somewhere. Finally, somebody who could get the message further along in the network would take it off the peg and run it through the teletype machine, which would send it to the next teletype machine. That was store-and-forward message switching. Packet switching uses much smaller chunks, and is intended to operate at higher speeds and be more responsive than typical store-and-forward message switching.

Hochfelder:

Yes. And Transmission Control Protocol was a method designed to handle the routing of these packets.

Cerf:

Actually it wasn’t. The routing was a separate activity.

All TCP did was make a reliable byte stream out of an unreliable, unsequenced packet stream. To think about the way TCP works, imagine that you’re sending a novel to your friend, but the only thing you can send is postcards. So you cut up the pages of the novel so that they fit on postcards.
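
Chopping the novel into pieces, together with the numbering Cerf describes next, is what lets the friend rebuild the text no matter what order the cards arrive in. A minimal Python sketch under that analogy; `chop` and `reassemble` are hypothetical helpers, and real TCP numbers bytes rather than whole postcards.

```python
import random


def chop(novel, card_size):
    """Cut the text into postcard-sized pieces and number them from 1."""
    starts = range(0, len(novel), card_size)
    return [(seq, novel[start:start + card_size])
            for seq, start in enumerate(starts, start=1)]


def reassemble(cards):
    """Put the pieces back in order by their numbers, however they arrived."""
    return "".join(piece for _, piece in sorted(cards))


novel = "It was a dark and stormy night; the rain fell in torrents."
cards = chop(novel, 8)
random.shuffle(cards)  # the mail may deliver them in any order
print(reassemble(cards) == novel)  # True
```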

When sending them, you might realize that the postcards could get out of order, so you’d better number all of them so your friend can put them back together in the right order. Then you’d remember that some of them could get lost, so you’d keep copies in case you have to retransmit them. After all of this you’d send a batch, and then you’d say, “Well, how do I know when to send copies?” And the answer is, “Ah-ah, I’ll get my friend to send me a postcard every once in a while saying, ‘I got everything up to, say, postcard number 126.’” If you have already sent subsequent postcards, receipt of this postcard would mean that you should start retransmitting postcards number 127, 128, and 129. If you hear nothing for a while, then you have to start retransmitting any unacknowledged postcards.
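
The "I got everything up to postcard number 126" reply is a cumulative acknowledgement. The sketch below, in Python, follows Cerf's own numbers: the sender keeps copies of every card, the friend reports the highest number received with no gaps, and whatever the ack does not cover is what may need resending. The `Sender` and `Receiver` classes are hypothetical illustrations of the idea, not TCP's actual data structures.

```python
class Receiver:
    """The friend: files each numbered card and reports a cumulative ack,
    i.e. "I got everything up to postcard number N"."""

    def __init__(self):
        self.received = {}  # seq -> piece
        self.ack = 0        # highest N with no gaps in 1..N

    def accept(self, seq, piece):
        self.received[seq] = piece
        while self.ack + 1 in self.received:
            self.ack += 1
        return self.ack


class Sender:
    """Keeps a copy of every card until an acknowledgement covers it."""

    def __init__(self, cards):
        self.copies = dict(cards)  # seq -> piece, kept for retransmission

    def on_ack(self, ack):
        # Everything up to `ack` arrived, so those copies can be discarded.
        for seq in [s for s in self.copies if s <= ack]:
            del self.copies[seq]
        # Whatever is left is unacknowledged: the candidates to send again.
        return sorted(self.copies)


cards = [(n, f"piece {n}") for n in range(1, 130)]
sender, friend = Sender(cards), Receiver()
for seq, piece in cards:
    if seq not in (127, 129):  # pretend the mail lost these two
        ack = friend.accept(seq, piece)
print(ack)                  # 126
print(sender.on_ack(ack))   # [127, 128, 129]
```

Card 128 actually arrived, but an ack of 126 cannot say so, which is why resending 127 through 129 is the simple choice here.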

The only other thing to worry about is that if, by some miracle, you mailed all 2,000 postcards that made up a 1,000-page book, they might all be delivered on the same day. The issue then is that your friend’s postbox might be too small to hold them, in which case things would fall on the floor and get lost, and you would have to retransmit. So you might have a protocol that says you won’t send more than 100 postcards at a time until you get an acknowledgement back from your friend. That’s a typical positive acknowledgement scheme.
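
Putting the pieces together, the rule "no more than 100 postcards at a time until an acknowledgement comes back" is a sliding window. This is a minimal, round-based Python sketch under stated simplifications: the cards are numbered 1 to N, acknowledgements are assumed never to be lost, and each round stands for "hear nothing for a while, then resend everything unacknowledged in the window" (a go-back-N style scheme). The `transfer` function and its parameters are hypothetical; real TCP uses byte-based windows and timers.

```python
import random


def transfer(cards, window=100, loss_rate=0.1, seed=0, max_rounds=10_000):
    """Round-based go-back-N sketch: at most `window` cards are ever
    outstanding (sent but not yet acknowledged). Acks are assumed never lost."""
    rng = random.Random(seed)
    pieces = dict(cards)      # sender's retained copies, seq -> piece
    total = len(pieces)
    postbox = {}              # what the friend has received so far
    ack = 0                   # friend's cumulative acknowledgement
    rounds = 0
    largest_batch = 0
    while ack < total:
        rounds += 1
        if rounds > max_rounds:
            raise RuntimeError("too many rounds; is loss_rate close to 1?")
        # Send, or resend, every unacknowledged card inside the window.
        batch = range(ack + 1, min(ack + window, total) + 1)
        largest_batch = max(largest_batch, len(batch))
        for seq in batch:
            if rng.random() >= loss_rate:   # this card survived the mail
                postbox[seq] = pieces[seq]
        # The friend replies "I got everything up to ack"; the window slides.
        while ack + 1 in postbox:
            ack += 1
    text = "".join(postbox[seq] for seq in range(1, total + 1))
    return text, rounds, largest_batch


book = [(n, f"[{n}]") for n in range(1, 2001)]   # 2,000 postcards
text, rounds, largest = transfer(book, window=100, loss_rate=0.2, seed=42)
print(largest <= 100)  # True: never more than 100 cards in flight
```

With no losses, the 2,000 cards go out in exactly 20 windows of 100. Resending the whole window after a loss keeps the sketch short at the cost of duplicate cards.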

Origins of the term "Internet," RFC documentation of early Internet

Hochfelder:

Let me see if I understand the origin of the term “Internet.” Basically, it was an attempt to link three different networks together?

Cerf:

It started out as an attempt to link the Packet Radio Network, the Packet Satellite Network, and the ARPANET together. We called it Internetting, and the short name for the ensemble was Internet. I don’t think the term “Internet” actually showed up until about 1976 or thereabouts.

Hochfelder:

The last term you used that I’m curious about is “knowledge robot.” Can you explain what you mean when you use that term?

Cerf:

What is RFC? When Steve Crocker was running the Network Working Group to develop the ARPANET protocols, he was a graduate student. We all knew we needed to communicate with each other, because ARPA expected the graduate students to develop the software and make the network useful. Yet, we sort of thought that eventually some professional management would show up to tell us what to do.

Steve Crocker came up with the idea of calling these notes “Request for Comments,” which seemed very innocuous and didn’t appear to be dictating anything to anybody. That got abbreviated to RFC. RFC 1 came out in something like April 1969, about 30 years ago. The RFCs have become, in effect, the memory of the project. They’re where all the standards from the Internet Engineering Task Force get published. The series also continues to publish papers of a more general nature, and some of the earlier works are even whimsical fiction pieces and occasionally poetry. So it is a very general series, but its intent in large measure is to make sure that participants in the evolution of what is now the Internet have a place to turn for documentation about what the protocols look like now and what the standards are.

Hochfelder:

What about the term “knowledge robot?”

Cerf:

Bob Kahn and I, when we were talking about the formation of CNRI, very quickly got into a conversation about knowledge robots. Eventually, we shortened that to “knowbots,” shorthand for a knowledgeable piece of mobile software.

Hochfelder:

Are those in existence now? Or is this something you are projecting in the future?

Cerf:

We designed an architecture for knowbots and actually implemented a prototype. I’ve been away from CNRI, so I don’t know how much farther they’ve gone. But similar things are happening: Java applets and Microsoft’s ActiveX software are both examples of software that can move around in the network and perform different functions, terminating when they are done or sending results to some third party. So you will see Java applets behaving rather similarly to our concept of knowbots.

Politics and the Internet

Hochfelder:

Earlier on, you mentioned something about the politics of the Internet. I know that’s probably a very open ended question, but I’m wondering if you could go over that point?

Cerf:

Yes, that is a very complicated question. In the early stages, of course, even with the predecessor, ARPANET, people thought we were crazy, because packet switching didn’t make a whole lot of sense to them. I think the Internet was almost as difficult to sell to ARPANET people, because they were comfortable with the protocols they had. Those protocols worked, they got their email, and they could do file transfers. You know, who needed this new Internet thing? Of course, that view didn’t take into account the proliferation of local area networks, such as the Ethernet developed by Metcalfe, which drove many decisions in the long run, because a huge number of networks required restructuring the address space and all kinds of other things. As far as the politics goes, in the early stages of almost any of these projects people are leery of relying on new ideas, because they don’t know whether they’ll work. Yet if you don’t do something, you never make any progress at all.

We found it was difficult to sell the idea of switching over to the new protocols. So, to make that happen, I went to the Defense Communications Agency, which is now DISA (the Defense Information Systems Agency); I was at ARPA at the time. I said, “I want to shut down the ARPANET for all but the Internet protocols for a day.” This was in the middle of 1982. After some discussion, they agreed to do that.

We essentially turned off the ability of the packet switches to communicate by any means other than TCP/IP. The first time we did that, we left it in place for a day, which meant a lot of people weren’t sending email, and there was a big hue and cry. Then later in the year, maybe October or so, we did it again for two days. That really got a lot of attention, and a lot of people hollered and screamed. But it was the only way to convince people we were serious.

There were other incidents, most recently the transformation of what’s been called the Internet Assigned Numbers Authority, or IANA, which Jon Postel ran for years, into a separate non-governmental organization called ICANN, the Internet Corporation for Assigned Names and Numbers. That whole initiative, which was to remove from the government the responsibility for forming policies for assigning names and numbers in the net, was a very important political move, because it said the government was no longer responsible for this; industry has to do it.

That is where the responsibility should be anyway. At some point, it makes no sense to spend our R and D dollars on something that’s basically infrastructure. So there have been very strong politics around exactly what ICANN does and what authority it does or does not have. There is a lot of debate still going on over that.

Hochfelder:

I myself am a bit uncertain on who actually runs or controls the Internet.

Cerf:

There is no one person, correct.

There are about 300,000 networks around the world hooked together on the Net. Many of them get service from upstream suppliers called Internet Service Providers, or backbone Internet Service Providers. As a result, you’ll see a certain hierarchical structure to the Net, from the edge towards the core. The core of the network is actually made up of several hundred networks that are in large measure interconnected with each other, either directly or through things called Metropolitan Area Exchanges or other kinds of exchanges. A lot of us run parts of the Internet, and we have to cooperate with each other to make sure it performs properly.

Hochfelder:

What role does the Internet Society play in that cooperation, that coordination?

Cerf:

The Internet Society was formed initially to help support the IETF, and it has subsequently been very active in promoting the spread of the Internet in Third World countries. We run an annual workshop, which typically graduates anywhere from 100 to 200 people from the Third World. We’ve done this for a number of years in a row, and the activity now takes place in more than one place at a time. In Cairo, we often do one of these the week before the INET meeting, our annual meeting, and we also do one in Brazil, or in South America; last year it was in Brazil. As a result, we’re expecting to see more and more of these kinds of educational training activities happen. We’ve also played a fair role in policy formation by helping to brief lawmakers, or by providing information that our chapters can use to brief their lawmakers, on how the Internet works, which rules can be made to function, and which ones don’t make sense because they can’t be implemented.

It has been something of a neutral territory for providing statistical information about the Net, and it tries to reach out to virtually everyone in order to help them become interested in and able to make use of Internet capability. The most recent activities, I think, have revolved around policies associated with ICANN, because some three years before ICANN was formed, the Internet Society tried to draw people together to put a foundation in place to handle the Internet assigned numbers and to maintain management in an industrial setting rather than one funded by the U.S. government. Jon Postel was very much involved in trying to do that.

It turned out to be very complicated. The Internet was growing exponentially, its value was growing, and people were beginning to do IPOs of great worth, so the politics got to be quite complex. So complex, in fact, that the White House got involved by way of Ira Magaziner, who was at that time senior policy advisor to the President. Ira produced a green paper and a white paper, and was very much involved in mediating among the various parties. The Internet Society helped by providing a place where some of these debates and discussions could happen, and it took some leadership in trying to bring some of the debates to closure. That one was very difficult.

Even now, we are still struggling to get everyone on the same page, because we’re creating many, many more organizations to advise the Internet Corporation for Assigned Names and Numbers as it goes forward. So there are plenty of politics there. There are also politics in terms of legislative rules. The attempt to control content on the Net, the Communications Decency Act, backfired, as I think it should have.

The export of cryptography is another important area, where the Internet Society felt the U.S. position was too stifling. So the organization has made efforts to put on the table reasoned descriptions of the outcomes of different policy choices. It’s not so much an advocacy effort as an attempt to explain clearly, to the people who have to make policy decisions, exactly what the implications of those decisions are.

Hochfelder:

Therefore it’s not partisan and it’s neutral.

Cerf:

It is pro-Internet, but it is not partisan toward any particular organization; it tries hard to stay very objective.

Hochfelder:

Yes. How does work in the Internet Society compare to a group like, say, the Electronic Frontier Foundation?

Cerf:

EFF has tended to focus fairly closely and narrowly on privacy concerns, for example. There is another group called EPIC, the Electronic Privacy Information Center, which has also had some hard concerns. I know EFF feels very strongly that wherever possible, information should not be taxed; it shouldn’t be costly right now. I think it’s had a mixed experience with that. Sometimes charging people for things is a good way of moderating demand. Sometimes it’s the only way to compensate someone for intellectual property, as you would probably appreciate, coming from IEEE.

So the idea that information wants to be free of charge, which is a common expression, needs to be moderated a little bit. I think it’s fair to say that some EFF members err in the free information direction rather than the information-for-sale direction. Personally, I think the network can handle all of the above in many different forms. It can be used academically: you put information out and make it available to anybody who needs it. It is also suitable for publishing information that you need to charge for in order to recover costs. As a result, you’re starting to see manuscripts showing up that you can actually download, a book, for example. This is in addition to being able to order a book through Amazon.com.

IEEE, IEEE Communications Society

Hochfelder:

Yes, that makes sense. If we could shift gears a little bit and talk about your involvement with the IEEE and the IEEE Communications Society?

Cerf:

It has been an interesting and, I would say, somewhat tenuous relationship. You know, I have been an IEEE member for a long time. That’s partly out of a belief that it is one of the principal engineering and professional organizations, and you can’t really claim to be an engineer unless you participate. However, I have not been as active a member there as I have been in ACM or in the Internet Society. That doesn’t mean I have any less strong feeling for it. It’s just that I only have a finite amount of time.

The IEEE has done some really good things, though. First of all, its publications are very good. I still subscribe to a number of them, in addition to Communications Transactions and Communications Magazine. It has also done some very interesting standardization work with the 802 series of standards, which has been an important contribution. I think on occasion the Communications Society in particular has been very helpful in formulating policy, or at least in explaining to members of Congress what the policy implications of various technical choices are. Also, the conferences they put on are almost invariably very interesting and useful. But I’m not taking part as an officer or anything like that in the Communications Society. I sort of pay my dues and enjoy the resulting publications.

Impact of the Internet

Hochfelder:

To shift gears once more, I’m very fascinated by what you’ve said about the politics of the Internet. As I said before the tape started running, I get the sense that we’re living through a revolution, and we’re not quite clear what it’s going to look like in 20 years. I’m wondering if you could talk a bit about the social, economic, and legal effects you think the Internet has already had, and what impact it will have on our lives in the future.

Cerf:

It is very clear to me that it’s going to have enormous economic impact. I think you will see the Internet carry all forms of media, whether it’s telephony or television or radio. That doesn’t mean the other forms will disappear; it just means the Internet will be there to augment them, in ways the existing gear doesn’t necessarily accommodate. Being able to take information, run it through programs, interpret it, and use software to add ancillary information to a television broadcast; using that information to search an archive and find a video clip because there’s a captioned source in there someplace: all of that is very well within reach of Internet technology, in my estimation.

It is plainly having an impact on the business world. Electronic commerce is the current buzzword. My guess is that we’ll be carrying ten percent of the world’s economy on the Internet, as Internet transactions, by 2003. There will be about 3.2 trillion dollars a year of value being exchanged on the Internet in just a few years’ time.

Education is likely to be transformed dramatically by the Internet. It already has been in some respects. People do research differently than they used to. They rely on shared databases they didn’t have access to before. When they’re searching for source material, they often go to the Net first before they turn to a library of books. I think the travel industry is going to be a major beneficiary of the Internet, because people will discover each other and things they’re interested in doing that they didn’t know about before, and they will travel to do them. I’ve seen plenty of evidence of that.

I think you’ll find a rather interesting social phenomenon, in that there will simultaneously be the preservation of cultural information and values by suitable web sites and the like, but also a kind of homogenization, in the sense that many, many people will learn English as a second language and use it for business and other communication. This isn’t to say that other languages will be neglected. You already know that there are many sites on the Net devoted to Russian and German and French and Spanish and Japanese and so on. Therefore, I think we will, in fact, enrich our cultural environment with this capability.

I know that I find myself just browsing through the Net, astonished at what is available and what can be found. The quality is often very good, even though occasionally it is terrible. There isn’t any guarantee that just because something is on the Net it’s well thought through or properly vetted. But you know the old story that the remedy for bad information is more information. So the solution to that problem is to put better information on the Net. The art of editing will not go away; it will still be valuable even if we do it electronically instead of on paper.

As far as kids are concerned, I’m seeing the younger generation completely taken by this technology. They’ve absorbed it. There’s no question. They have absorbed this stuff. It’s their network. When you talk to them, they say, “Don’t screw it up. It’s our Net.” Consequently, I see very positive side effects.

There are some risks that we have got to overcome. One of them is that with all of these shared databases, there’s bound to be personal information in them that we didn’t want released, unless we can do something about encrypting the data. There are questions about intellectual property protection, copyright, and trademark, because material gets duplicated through the Net. There are questions of taxation, whether and how transactions on the Net should be taxed. You can see that there is a very long list, and as time goes on, we will collectively be challenged to find solutions to those questions.

I didn’t mention one other thing that is likely to happen. Where today there are about 50 million devices on the Internet, my projection is that there will be 900 million devices on the Net, almost a billion, by about the year 2007. A lot of them won’t be classical desktops, laptops, and so on; they’ll be personal digital assistants, cell phones, web television sets, and appliances around the house. Mark Weiser’s notion of a ubiquitous computing environment is really going to happen. It’ll be fully networked, so we need to learn how to adjust to a world where many, many devices are part of the Network. Also, we can reasonably expect interaction indirectly.

Hochfelder:

Is this similar to digital convergence that we’ve been hearing about for the past few years?

Cerf:

In some respects, although there’s more than a convergence going on, because what we’re doing is Internet-enabling a great many appliances that never had Internet capability at all.

Hochfelder:

Such as?

Cerf:

The television set, the washing machine, the refrigerator. You may wind up having scanners that can detect the bar codes when you put things in your refrigerator, so it keeps track of what’s in the refrigerator. When it’s time to go shopping, you pull up a web page and click on a chart that says here’s all the stuff that you bought. Which ones do you want this time? And there are all kinds of possibilities here. You can even turn a box of soap into a service, by selling somebody some soap. Then when they have a garment that’s been badly stained or something, they can go on a web site, find out how to use the soap in order to wash the garment. Then use the Net to set the washing machine up with the proper cycles and everything, to use the soap to get rid of that particular stain.

Your cars will be Internet-enabled so you’ll know where you are, because it’ll have GPS. It will have access to geographically indexed databases so it can answer the question where is the nearest Thai restaurant—a very important question. Where is the nearest ATM machine? All those things make sense if you can ask the question from a geographically indexed point of view. But you have to know where you are, and you have to be on the Net in order to make use of that capability.

Hochfelder:

What is the likely timeframe for these sorts of innovations?

Cerf:

It’s starting to happen now. We’re starting to see personal digital assistants that are Internet-enabled. Telephones, cell phones that are Internet-enabled, two-way pagers like the one I’m wearing now, which can send and receive email. Some of these are actually now being used not only to send and receive email, but to place orders on the stock market and get confirmation back. So, these things will simply accrete with time as people come up with new ways of using Internet enabled devices as part of the Internet.

Hochfelder:

In the next decade or so will we see a lot more changes?

Cerf:

Yes. Your glasses may actually become reading devices, in the more technical sense of the word, in a computer sense of the word, so that you’ll put your glasses on and they will project an image onto your retina that only you will see. It’ll be a virtual image; no one else could see it because they don’t have the same glasses on. It will not be a question of wearing 3-D glasses or anything. It’s actually a question of sending data to the glasses and having the glasses generate the image for you, projected onto your retina. That gives you privacy, for example. We have all kinds of concerns about privacy and confidentiality on the Net, so cryptography is going to be a very big, important part of the Network as time goes on. More important than it is now.

Hochfelder:

Do you have any last thoughts to add?

Cerf:

Only that revolutions like this don’t come along very often. They have consequences that we can’t necessarily see. Therefore, it would not hurt for us to be constantly on the lookout for what the implications are, to keep looking back and asking ourselves what’s happened over the last ten years. Is it good, bad, or indifferent?

I’m an eternal optimist, and I think this revolution is going to be one that will be beneficial in the long run. It’s already opened a dialogue among people on the planet whose voices never would have been heard, who might never have met each other or discussed a particular topic. This medium now allows them to do that. It’s not like a telephone call, where you sort of have to know who you’re calling. On the Internet, you don’t necessarily know, because you’re posting a newsgroup item or sending email through distribution lists. You find each other in chat rooms. You end up in common places on the Net. Those are all amazingly powerful tools for stimulating human discourse. Frankly, it’s my hope that the increased level of discussion and debate will help us manage what is obviously a very complex global society.